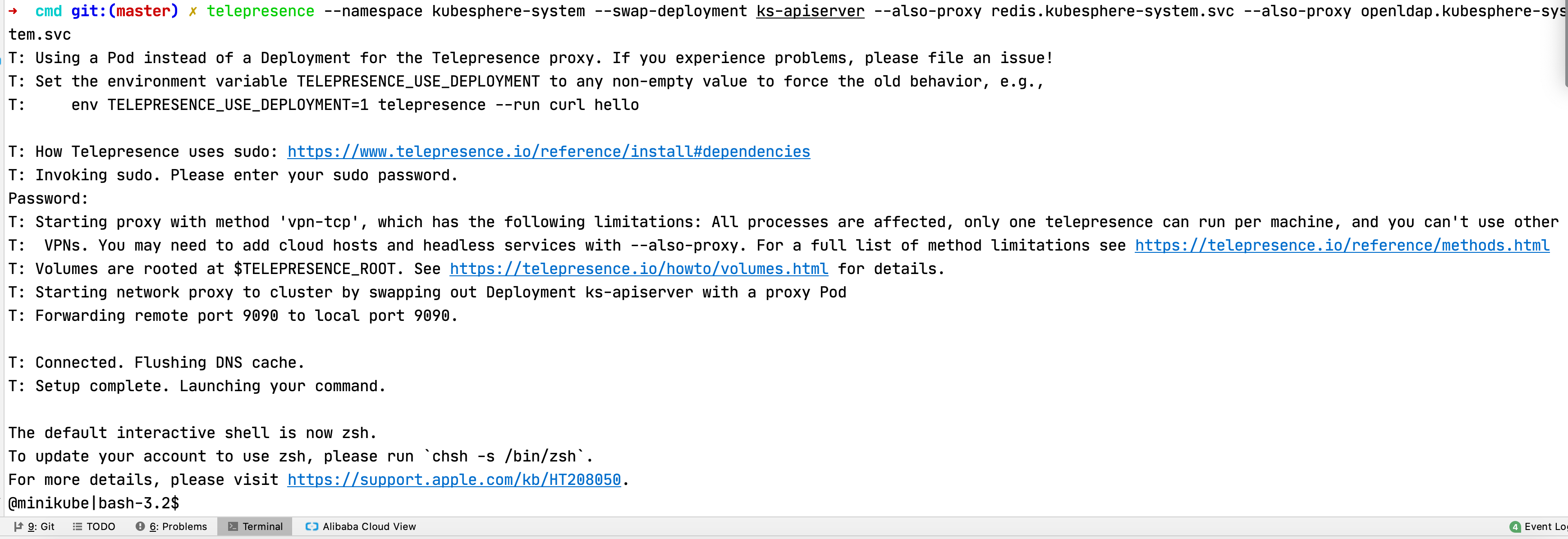

Jeff, I added the option `--method inject-tcp` to telepresence and it seems to work now. What is the reason? Is it because of this headless svc?

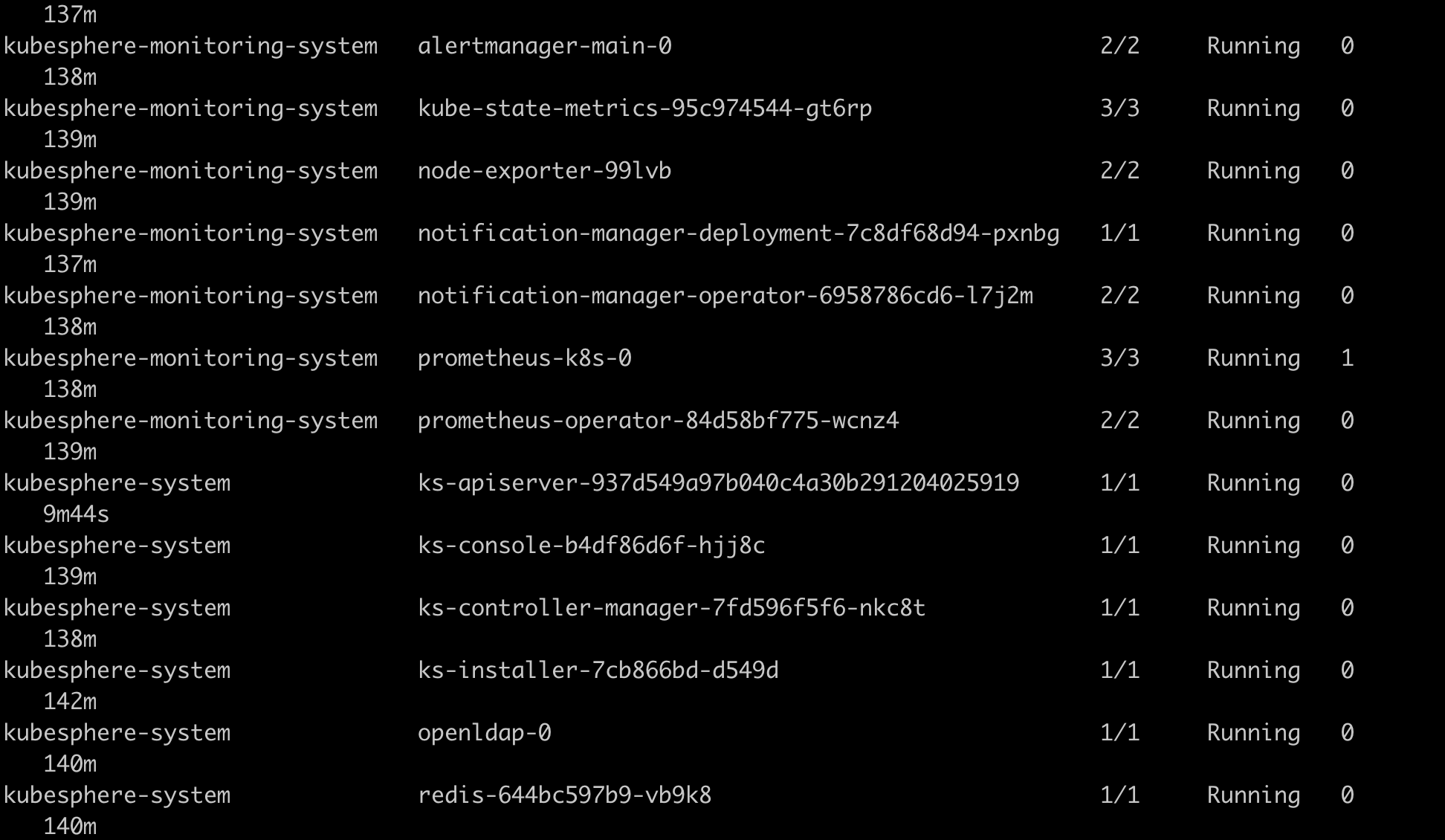

```
root@k8s-01:~# kubectl get svc -A
NAMESPACE                      NAME                                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                        AGE
default                        kubernetes                                ClusterIP   10.233.0.1      <none>        443/TCP                        190d
kube-system                    coredns                                   ClusterIP   10.233.0.3      <none>        53/UDP,53/TCP,9153/TCP         190d
kube-system                    etcd                                      ClusterIP   None            <none>        2379/TCP                       190d
kube-system                    kube-controller-manager-svc               ClusterIP   None            <none>        10252/TCP                      190d
kube-system                    kube-scheduler-svc                        ClusterIP   None            <none>        10251/TCP                      190d
kube-system                    kubelet                                   ClusterIP   None            <none>        10250/TCP,10255/TCP,4194/TCP   190d
kube-system                    metrics-server                            ClusterIP   10.233.24.198   <none>        443/TCP                        190d
kubesphere-controls-system     default-http-backend                      ClusterIP   10.233.47.72    <none>        80/TCP                         190d
kubesphere-monitoring-system   alertmanager-main                         ClusterIP   10.233.44.235   <none>        9093/TCP                       190d
kubesphere-monitoring-system   alertmanager-operated                     ClusterIP   None            <none>        9093/TCP,9094/TCP,9094/UDP     190d
kubesphere-monitoring-system   kube-state-metrics                        ClusterIP   None            <none>        8443/TCP,9443/TCP              190d
kubesphere-monitoring-system   node-exporter                             ClusterIP   None            <none>        9100/TCP                       190d
kubesphere-monitoring-system   notification-manager-controller-metrics   ClusterIP   10.233.53.154   <none>        8443/TCP                       190d
kubesphere-monitoring-system   notification-manager-svc                  ClusterIP   10.233.9.248    <none>        19093/TCP                      190d
kubesphere-monitoring-system   prometheus-k8s                            ClusterIP   10.233.48.130   <none>        9090/TCP                       190d
kubesphere-monitoring-system   prometheus-operated                       ClusterIP   None            <none>        9090/TCP                       190d
kubesphere-monitoring-system   prometheus-operator                       ClusterIP   None            <none>        8443/TCP                       190d
kubesphere-system              ks-apiserver                              ClusterIP   10.233.21.82    <none>        80/TCP                         190d
kubesphere-system              ks-console                                NodePort    10.233.24.152   <none>        80:30880/TCP                   190d
kubesphere-system              ks-controller-manager                     ClusterIP   10.233.35.26    <none>        443/TCP                        190d
kubesphere-system              openldap                                  ClusterIP   None            <none>        389/TCP                        190d
kubesphere-system              redis                                     ClusterIP   10.233.59.1     <none>        6379/TCP                       190d
```
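Since the question is whether a headless Service is involved, here is a minimal sketch (Python, not part of the original post) that picks out headless Services, i.e. rows whose CLUSTER-IP is `None`, from `kubectl get svc -A` text output. It assumes the default seven-column table shown above; the function name `headless_services` is hypothetical. For scripting, `kubectl get svc -A -o json` and checking `spec.clusterIP == "None"` would be more robust than splitting columns.

```python
from typing import List, Tuple


def headless_services(table: str) -> List[Tuple[str, str]]:
    """Return (namespace, name) for rows whose CLUSTER-IP column is "None".

    Assumption: the input is `kubectl get svc -A` default output, where data
    rows have exactly 7 whitespace-separated columns:
    NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
    """
    result = []
    for line in table.splitlines():
        cols = line.split()
        # The header row has CLUSTER-IP in column 4, and the shell prompt or
        # blank lines do not have 7 columns, so only headless rows match.
        if len(cols) == 7 and cols[3] == "None":
            result.append((cols[0], cols[1]))
    return result


if __name__ == "__main__":
    sample = """\
NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-system etcd ClusterIP None <none> 2379/TCP 190d
kubesphere-system ks-apiserver ClusterIP 10.233.21.82 <none> 80/TCP 190d
kubesphere-system openldap ClusterIP None <none> 389/TCP 190d
"""
    # Prints kube-system/etcd and kubesphere-system/openldap, one per line.
    for ns, name in headless_services(sample):
        print(f"{ns}/{name}")
```

Against the full listing above, the headless entries are etcd, kube-controller-manager-svc, kube-scheduler-svc, kubelet, alertmanager-operated, kube-state-metrics, node-exporter, prometheus-operated, prometheus-operator and openldap; ks-apiserver has ClusterIP 10.233.21.82, so it is not itself headless.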

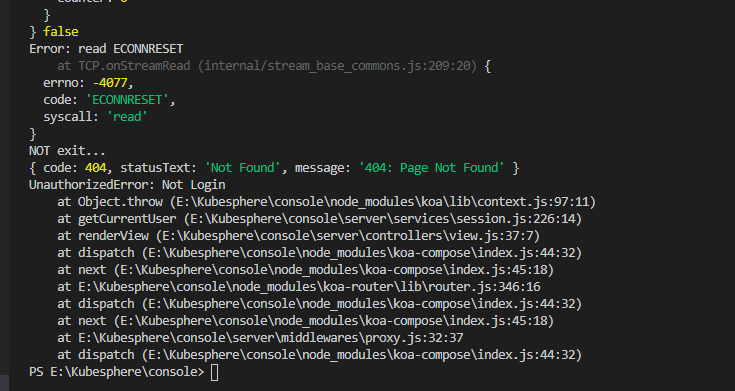

Earlier ks-console log:

```
{"log":"{ FetchError: request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: socket hang up\n","stream":"stderr","time":"2021-08-12T08:17:25.154628598Z"}
{"log":" at ClientRequest.\u003canonymous\u003e (/opt/kubesphere/console/server/server.js:80604:11)\n","stream":"stderr","time":"2021-08-12T08:17:25.15465047Z"}
{"log":" at emitOne (events.js:116:13)\n","stream":"stderr","time":"2021-08-12T08:17:25.154656974Z"}
{"log":" at ClientRequest.emit (events.js:211:7)\n","stream":"stderr","time":"2021-08-12T08:17:25.154661551Z"}
{"log":" at Socket.socketOnEnd (_http_client.js:437:9)\n","stream":"stderr","time":"2021-08-12T08:17:25.15466586Z"}
{"log":" at emitNone (events.js:111:20)\n","stream":"stderr","time":"2021-08-12T08:17:25.154670278Z"}
{"log":" at Socket.emit (events.js:208:7)\n","stream":"stderr","time":"2021-08-12T08:17:25.154674514Z"}
{"log":" at endReadableNT (_stream_readable.js:1064:12)\n","stream":"stderr","time":"2021-08-12T08:17:25.154678527Z"}
{"log":" at _combinedTickCallback (internal/process/next_tick.js:139:11)\n","stream":"stderr","time":"2021-08-12T08:17:25.154682732Z"}
{"log":" at process._tickCallback (internal/process/next_tick.js:181:9)\n","stream":"stderr","time":"2021-08-12T08:17:25.154687012Z"}
{"log":" message: 'request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: socket hang up',\n","stream":"stderr","time":"2021-08-12T08:17:25.154691412Z"}
{"log":" type: 'system',\n","stream":"stderr","time":"2021-08-12T08:17:25.154696141Z"}
{"log":" errno: 'ECONNRESET',\n","stream":"stderr","time":"2021-08-12T08:17:25.154700064Z"}
{"log":" code: 'ECONNRESET' }\n","stream":"stderr","time":"2021-08-12T08:17:25.154704175Z"}
{"log":" --\u003e GET /login 200 9ms 14.82kb 2021/08/12T16:17:25.159\n","stream":"stdout","time":"2021-08-12T08:17:25.159315736Z"}
{"log":" \u003c-- GET /kapis/resources.kubesphere.io/v1alpha2/components 2021/08/12T16:17:27.421\n","stream":"stdout","time":"2021-08-12T08:17:27.421992195Z"}
{"log":" \u003c-- GET /kapis/resources.kubesphere.io/v1alpha3/deployments?sortBy=updateTime\u0026limit=10 2021/08/12T16:17:29.688\n","stream":"stdout","time":"2021-08-12T08:17:29.689260211Z"}
{"log":" \u003c-- GET / 2021/08/12T16:17:35.147\n","stream":"stdout","time":"2021-08-12T08:17:35.148138272Z"}
```
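The ks-console lines above are in Docker's json-file log format: one JSON object per line, with the stack trace split across the `log` fields. A minimal sketch (Python, assuming that format; `reassemble_docker_log` is a hypothetical helper, not a Docker or KubeSphere API) to turn them back into a readable trace, optionally keeping only one stream:

```python
import json
from typing import Optional


def reassemble_docker_log(raw: str, stream: Optional[str] = None) -> str:
    """Join the "log" fields of Docker json-file records into plain text.

    Assumption: each non-blank input line is one JSON object with "log",
    "stream" and "time" keys, as in the ks-console output above.
    If `stream` is given ("stdout" or "stderr"), other streams are skipped.
    """
    parts = []
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank separator lines
        record = json.loads(line)
        if stream is not None and record.get("stream") != stream:
            continue
        # Each "log" value already ends with "\n"; json.loads also decodes
        # escapes such as \u003c back to "<".
        parts.append(record["log"])
    return "".join(parts)


if __name__ == "__main__":
    sample = r'''
{"log":" errno: 'ECONNRESET',\n","stream":"stderr","time":"2021-08-12T08:17:25.154700064Z"}
{"log":" code: 'ECONNRESET' }\n","stream":"stderr","time":"2021-08-12T08:17:25.154704175Z"}
{"log":" \u003c-- GET / 2021/08/12T16:17:35.147\n","stream":"stdout","time":"2021-08-12T08:17:35.148138272Z"}
'''
    # Prints only the two stderr lines of the error object.
    print(reassemble_docker_log(sample, stream="stderr"), end="")
```

Filtering on `stderr` separates the `ECONNRESET` trace from the interleaved request lines that ks-console writes to `stdout`.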

FeynmanK零SK贰SK壹S